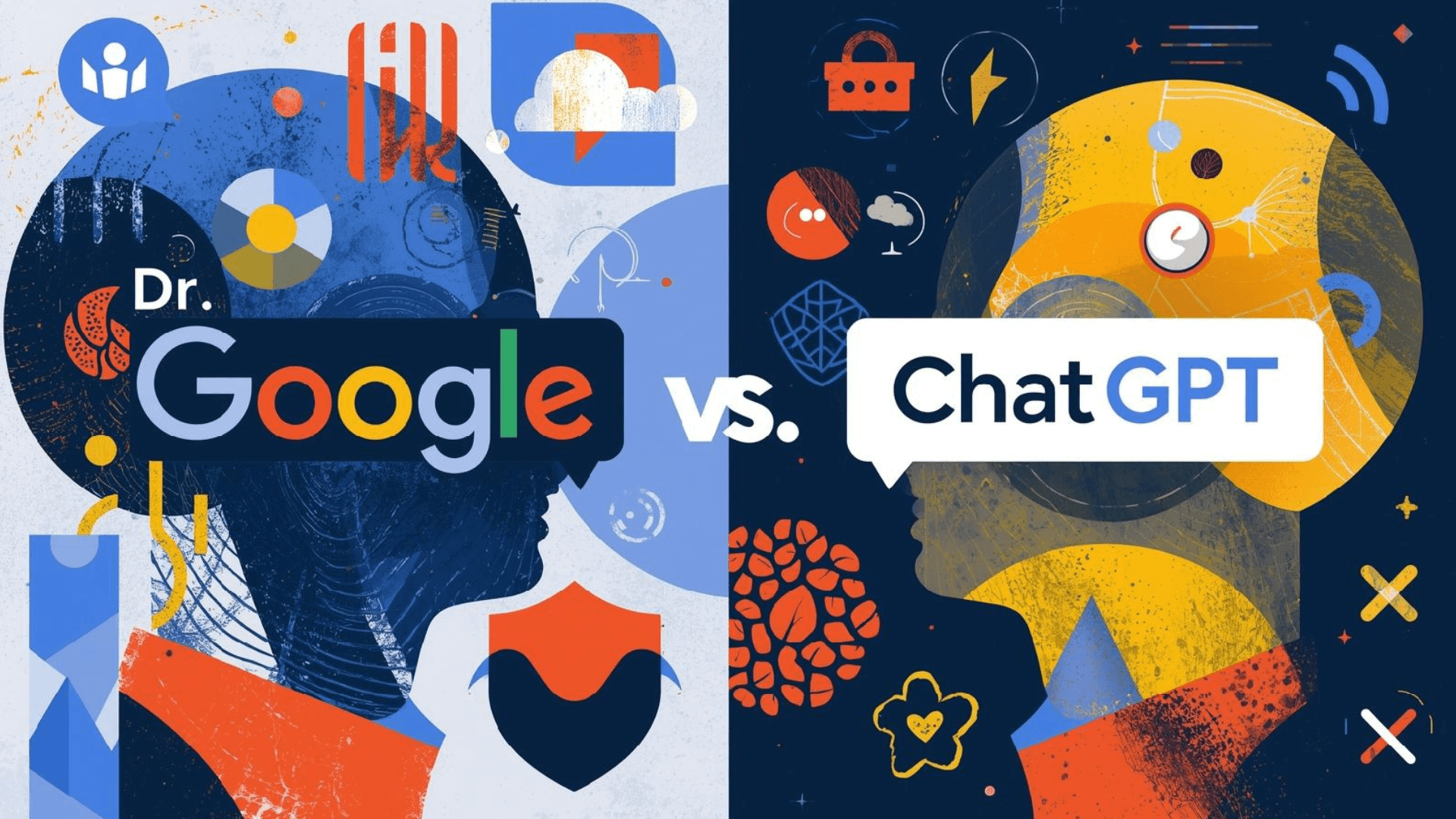

Dr. Google vs. ChatGPT: Revolutionizing Health Advice with AI & Cloud Security

Stop Googling Symptoms. Start Conversing.

Remember the panic?

You feel a twinge.

You type it into the search bar.

Suddenly, you have a rare tropical disease.

We’ve all been there.

Enter the new era.

Dr. Google is out.

ChatGPT is in.

The shift is massive.

We are moving from keyword matching to contextual understanding.

It’s not just about finding links anymore.

It’s about personalized health information from AI.

But there is a catch.

A big one.

Accuracy and hallucinations.

For IT professionals and DevOps engineers, this is critical.

It’s not just health data.

It’s data security.

We see a revolution in how we access care.

But we also see risks.

Is the advice accurate?

Is your data secure?

Can you trust a chatbot with your life?

The stakes are high.

You need a partner who understands the cloud.

Devolity Business Solutions knows this landscape.

We secure the infrastructure that powers these AI models.

We bridge the gap between innovation and safety.

In this deep dive, we compare experiences.

Dr. Google vs ChatGPT health experiences.

We analyze accuracy.

We test privacy.

We look at the future of secure health AI.

Let’s get started. 🚀

The Evolution of Digital Health Search (Dr. Google)

The Keyword Era

For decades, “Dr. Google” ruled.

It was simple.

Type “headache and fatigue.”

Get 50 million results.

The algorithm matched keywords.

It didn’t understand you.

It didn’t know your history.

It just knew strings of text.

The Problem with Links

You click the first link.

It’s a forum from 2005.

You click the second.

It’s a WebMD article about brain tumors.

The anxiety spikes.

This is “cyberchondria.”

Traditional search is passive.

It dumps information on you.

It forces you to filter it.

And we are bad filters.

Why Dr. Google Fails:

- No Context: It treats every search as new.

- SEO Bias: Top results are often just well-optimized.

- Ads First: Commercial interests skew the advice.

- Zero Empathy: It’s a cold database approach.

For cloud business leaders, this is legacy tech.

It’s like running on-prem servers in 2026.

It works, but it’s inefficient.

It lacks intelligence.

We need more.

We need automation.

We need context.

The Rise of Generative AI in Health (ChatGPT)

The Conversational Revolution

Then came the LLMs.

ChatGPT changed the game.

You don’t search keywords.

You describe a situation.

“I have a headache, but I also skipped coffee.”

ChatGPT gets it.

“It might be caffeine withdrawal.”

This is what a contextual health advice chatbot does.

It synthesizes information.

It mimics a consult.

Personalized Health Information AI

Imagine the power.

An AI that knows your baseline.

It processes complex queries.

It can read medical reports (with privacy tools).

It explains jargon in plain English.

This is the promise of Generative AI.

It feels like magic.

But software engineers know better.

It’s probability, not magic.

It’s next-token prediction.

ChatGPT’s Edge:

- Synthesis: It reads multiple sources for you.

- Tone: It can be empathetic and calm.

- Follow-up: You can ask clarifying questions.

- Speed: Instant answers, no clicking links.

But here is the danger.

ChatGPT’s medical accuracy is not perfect.

It can hallucinate.

It can sound confident while being wrong.

For a DevOps engineer, it’s like a buggy deploy.

It looks fine, but the backend is on fire.

We must trust but verify.

Comparative Analysis: The Showdown

Accuracy vs. Accessibility

Let’s look at the data.

Dr. Google gives you sources.

You can verify the source.

ChatGPT gives you an answer.

The source is often opaque.

Which is better?

| Feature | Dr. Google (Search) | ChatGPT (GenAI) |
|---|---|---|
| Input | Keywords / Fragments | Natural Language / Context |
| Output | List of Links (URL List) | Synthesized Answer |
| Context | None (Session-agnostic) | High (Session-aware) |
| Source Transparency | High (You see the URL) | Low (Often hidden) |
| Hallucination Risk | Low (Content exists) | High (AI invents facts) |
| User Burden | High (Must read/filter) | Low (Just read answer) |

The Privacy Paradox

This is huge for Cyber Security.

When you Google, your clicks are tracked.

Ad networks build a profile.

When you chat, you share thoughts.

You share symptoms.

You might share too much.

Devolity Hosting experts warn about this.

Where does that data go?

Is it training the next model?

Is it compliant with HIPAA?

If you use public AI, assume it’s public.

Secure, private AI instances are the only path.
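One practical habit that follows from "assume it’s public" is to scrub obvious identifiers before a prompt leaves your network. The sketch below is a minimal illustration, not a compliance control: the three regex patterns and the `redact` helper are assumptions made for this example, and real PHI detection needs far broader coverage (names, addresses, record numbers).

```python
import re

# Hypothetical patterns for illustration only; three regexes do not
# amount to real PHI detection.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "I am jane.doe@example.com, call 555-123-4567. My knee hurts after a 5k."
    print(redact(prompt))
```

The symptom text passes through untouched while the contact details are masked, so the chatbot still gets the context it needs.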

Context is King

Dr. Google doesn’t know you are a runner.

It doesn’t know you take beta-blockers.

ChatGPT can know this.

If you provide context, the advice shifts.

“My knee hurts” vs “My knee hurts after a 5k.”

The contextual health advice chatbot wins here.

It tailors the output.

It acts like a triage nurse.

It’s functional.

It’s efficient.

It’s the future of Automation.

Technical Case Study: Building a Secure Health Bot

The Problem: Data Silos and Insecurity

Let’s get technical.

Real-world example time.

A healthcare provider wanted an AI assistant.

They had thousands of PDFs.

Patient guidelines, drug info, protocols.

The “Before” State:

- Doctors searched internal wikis.

- Keywords failed (e.g., “heart” vs “cardiac”).

- Patients called support for basic info.

- Data leaked via insecure email chains.

- No Cyber Security governance.

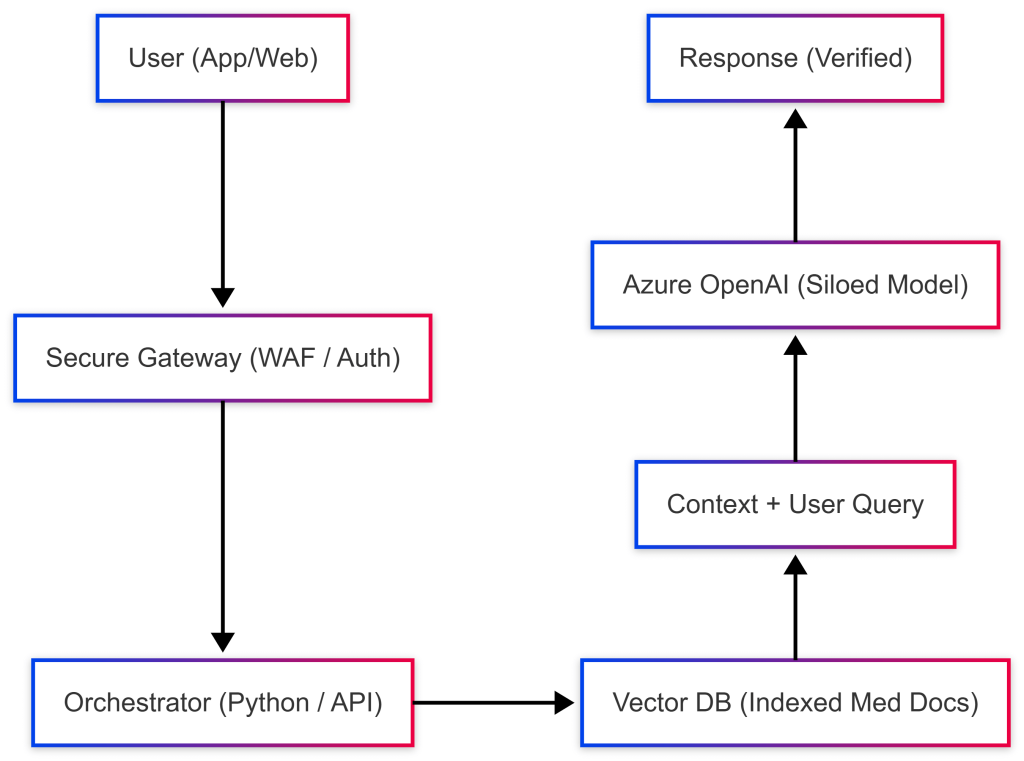

The Solution: RAG Architecture on Cloud

We built a Retrieval-Augmented Generation (RAG) system.

We used Azure Cloud and Terraform.

We didn’t just unleash a bot.

We grounded it.

The Tech Stack:

- Infrastructure: Terraform for IaC (Infrastructure as Code).

- Cloud: Azure OpenAI Service (Private networking).

- Vector DB: Stores embeddings of medical docs.

- Orchestration: Python-based backend.

- Security: Private endpoints, RBAC, Data Loss Prevention.

The “After” Workflow

- User asks: “What is the dose for Drug X?”

- System: Embeds query.

- Search: Finds relevant secure docs in Vector DB.

- AI: Synthesizes answer only from those docs.

- Output: Verified answer with citations.
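The five steps above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the production system: the `DOCS` store, the file names, and the word-count `embed` function are invented for illustration, and a real deployment would use an embedding model, a vector database, and an actual LLM call where this sketch only builds the grounded prompt.

```python
import math
import re
from collections import Counter

# Hypothetical stand-ins for the secure document store. "Drug X" comes from
# the workflow above; the file names and text are invented.
DOCS = {
    "drug-x-guideline.pdf": "Drug X dose guidance lives in this document.",
    "knee-protocol.pdf": "Knee pain after running: rest, ice, and review.",
}


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts instead of a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 1, min_score: float = 0.1) -> list:
    """Return up to k (name, text) pairs that score above min_score."""
    q = embed(query)
    scored = sorted(
        ((cosine(q, embed(text)), name) for name, text in DOCS.items()),
        reverse=True,
    )
    return [(name, DOCS[name]) for score, name in scored[:k] if score >= min_score]


def answer(query: str) -> dict:
    """Build a grounded prompt plus citations, or refuse if nothing matches."""
    hits = retrieve(query)
    if not hits:
        # No source found: refuse rather than let the model improvise.
        return {"prompt": None, "citations": []}
    context = "\n".join(f"[{name}] {text}" for name, text in hits)
    prompt = (
        "Answer only from the sources below and cite them by name.\n"
        f"{context}\n\nQuestion: {query}"
    )
    return {"prompt": prompt, "citations": [name for name, _ in hits]}


if __name__ == "__main__":
    print(answer("What is the dose for Drug X?")["citations"])
```

The design choice worth noting is the refusal path: when retrieval finds nothing relevant, the sketch returns no prompt at all instead of asking the model to answer from memory.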

Architecture Diagram

Key Wins

- Far Fewer Hallucinations: The AI answers only from retrieved internal documents and cites them.

- Private by Design: Data stays inside the private Azure virtual network (VNet).

- Speed: Answers in milliseconds.

- Scalability: Auto-scaling with DevOps pipelines.

This is AI vs traditional search for health in practice.

It’s better.

It’s what Devolity Business Solutions delivers.

Implementation Detail: Securing the Pipe with Terraform

For the DevOps engineers and architects reading this, let’s look at the “How”.

Security isn’t a policy document; it’s code.

To ensure HIPAA/GDPR compliance, we don’t manually click buttons in the Azure Portal.

We use Terraform to lock down the infrastructure.

The “Private Endpoint” Strategy: A common vulnerability is exposing the AI API to the public internet.

We block that.

Here is a snippet of the Infrastructure as Code (IaC) configuration:

```hcl
resource "azurerm_cognitive_account" "secure_ai" {
  name                          = "devolity-secure-health-ai"
  location                      = azurerm_resource_group.rg.location
  resource_group_name           = azurerm_resource_group.rg.name
  kind                          = "OpenAI"
  sku_name                      = "S0"
  public_network_access_enabled = false # CRITICAL: No Public Internet Access
}

resource "azurerm_private_endpoint" "ai_endpoint" {
  name                = "pe-health-ai"
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  subnet_id           = azurerm_subnet.private.id

  private_service_connection {
    name                           = "psc-health-ai"
    private_connection_resource_id = azurerm_cognitive_account.secure_ai.id
    subresource_names              = ["account"]
    is_manual_connection           = false
  }
}
```

Why this code matters for Health:

- Isolation: Traffic stays primarily on the Azure backbone.

- Compliance: It supports audit requirements for “Data Loss Prevention.”

- Governance: Devolity uses RBAC (Role-Based Access Control) so developers can’t access patient data; only the system can.

This level of rigor allows us to use Automation safely.

It bridges the gap between a “cool AI demo” and a “clinical-grade tool.”
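A setting like `public_network_access_enabled = false` is only useful if nobody quietly flips it back. One way to guard it, sketched here as an assumption rather than a description of Devolity's pipeline, is to export the plan with `terraform show -json` and fail the build if any cognitive account would allow public access. The `public_ai_accounts` helper and the sample plan are hypothetical; the `resource_changes` and `change.after` fields follow Terraform's JSON plan output.

```python
import json


def public_ai_accounts(plan: dict) -> list:
    """Return addresses of cognitive accounts whose planned state allows public access."""
    offenders = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "azurerm_cognitive_account":
            continue
        after = (rc.get("change") or {}).get("after")
        if after is None:
            # The resource is being destroyed; there is nothing to check.
            continue
        # Assumption: a missing attribute is treated as public, to fail safe.
        if after.get("public_network_access_enabled", True):
            offenders.append(rc.get("address", "<unknown>"))
    return offenders


if __name__ == "__main__":
    # Hypothetical, trimmed-down plan for illustration.
    sample = json.loads("""
    {"resource_changes": [
      {"address": "azurerm_cognitive_account.secure_ai",
       "type": "azurerm_cognitive_account",
       "change": {"after": {"public_network_access_enabled": false}}}
    ]}
    """)
    print(public_ai_accounts(sample))
```

In a pipeline, the plan would come from `terraform plan -out=tfplan` followed by `terraform show -json tfplan`, loaded with `json.load`, and a non-empty result would block the deploy.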

Troubleshooting Guide: Navigating Digital Health Risks

When you deploy AI for health or even just use Dr. Google, things break.

Information gets twisted.

Privacy gets breached.

Here is your guide to fixing it.

This is for the IT admin, the provider, and the smart patient.

Common Symptoms and Solutions

| Symptom | Root Cause | Solution |
|---|---|---|
| “The AI invented a medical study.” | Hallucination. The LLM prioritized fluency over fact. | Implement RAG. Ground the AI in a verified vector database (like in our case study). Never use base models for medical advice. |
| “I see ads for my condition everywhere.” | Tracker Leakage. Cookies and pixels shared your search data. | Use Privacy Browsers or VPNs. For enterprise, use Private Search instances that do not log queries. |
| “The chatbot is too slow (Latency).” | Cold Starts / Heavy Compute. The model is too large or unoptimized. | Optimize Infrastructure. Reserve dedicated model capacity, such as Amazon Bedrock Provisioned Throughput or Azure OpenAI provisioned throughput units. |
| “My health data is in a public log.” | Misconfigured ACLs. Access Control Lists were too permissive. | Audit with Terraform. Codify security policies and apply “Least Privilege” access principles. |
| “The advice contradicts itself.” | Data Conflicts. The training data contained opposing views. | Curate the Knowledge Base. Manually review the documents fed into your RAG system. |

Pro Tip for DevOps

Monitoring is key.

Don’t just monitor uptime.

Monitor semantic drift.

If your AI starts answering differently, know why.

Use tools like Prometheus or Azure Monitor for your AI endpoints.
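A simple way to start watching for drift is to keep a fixed set of "canary" questions, store the approved answers, and compare each new answer against its baseline. The sketch below is a rough illustration under stated assumptions: the `similarity` and `drifted` helpers are hypothetical, and word-overlap (Jaccard) scoring stands in for the embedding-based comparison a real system would more likely use. The resulting score is the kind of number you could export as a metric to your monitoring stack.

```python
import re


def tokens(text: str) -> set:
    """Lowercase word set of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of word sets: 1.0 is identical vocabulary, 0.0 is none shared."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def drifted(baseline: dict, current: dict, threshold: float = 0.5) -> list:
    """Return canary questions whose current answer scores below the threshold."""
    flagged = []
    for question, old_answer in baseline.items():
        new_answer = current.get(question, "")
        if similarity(old_answer, new_answer) < threshold:
            flagged.append(question)
    return flagged


if __name__ == "__main__":
    # Hypothetical canary answers for illustration.
    baseline = {"knee pain after a 5k": "Rest the knee and apply ice."}
    current = {"knee pain after a 5k": "Apply ice and rest the knee."}
    print(drifted(baseline, current))
```

A reworded answer with the same vocabulary passes, while a missing or substantially different answer is flagged for a human to review.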

How Devolity Business Solutions Optimizes Your Health AI

Your Partner in Secure Innovation

You have the vision.

“I want a secure, AI-driven patient portal.”

“I want to automate triage.”

But the execution is hard.

Regulatory compliance is a minefield.

Data gravity is real.

This is where Devolity Business Solutions steps in.

Why Choose Devolity?

We don’t just “do cloud.”

We “do secure, compliant, intelligent cloud.”

We are experts in Devolity Hosting for high-compliance industries.

Our Expertise:

- Infrastructure as Code: We build repeatable, secure environments using Terraform and Ansible.

- Cloud Agnostic: Whether you use AWS Cloud, Azure Cloud, or Google Cloud, we optimize it.

- Security First: We integrate Cyber Security at every layer. WAFs, encryption, identity management.

- AI Readiness: We prepare your data. We build the pipelines that feed your models.

Real-World Impact

We helped a mid-sized clinic move from paper to a secure cloud app.

We reduced their “search time” by 70%.

We secured their patient data with military-grade encryption.

We didn’t just install software.

We transformed their business process.

Ready to build the future of health?

Devolity is your architect.

The Future of Health AI: Predictions for 2030

Beyond Chatbots: The Rise of Digital Twins

We are just at the beginning.

Comparing Dr. Google to ChatGPT is like comparing a map to a GPS.

But the future?

The future is a self-driving car.

We predict the rise of Medical Digital Twins.

Imagine a virtual copy of your physiology.

It lives in a secure cloud container.

It is fed by your wearables (Apple Watch, Oura Ring).

It is analyzed by continuous AI agents.

You don’t ask “I have a headache.”

The AI alerts you: “Your hydration is low, and your BP is rising. Drink water.”

This is proactive, not reactive.

The Integration of Genomics and AI

Dr. Google knows generalities.

“Coffee is good for you.”

“Coffee is bad for you.”

It’s confusing.

Future AI will know your genes.

“You metabolize caffeine slowly. Avoid it after 2 PM.”

This Genetic-AI-Cloud convergence is massive.

It requires massive compute.

It requires petabytes of secure storage.

Who will host this?

Cloud Providers like AWS and Azure.

Who will manage the security?

Experts like Devolity Business Solutions.

The End of the “Second Opinion”?

Will AI replace doctors?

No.

But it might replace the need for a second opinion on basic diagnostics.

If an AI trains on 10 million cases, its error rate on narrow diagnostic tasks may drop below human levels.

The role of the doctor shifts.

They become the “Human in the Loop” (HITL).

They verify the AI’s logic.

They provide the compassion.

The AI provides the data processing.

This partnership is the Gold Standard of future healthcare.

Security Challenges Ahead

As AI gets smarter, attacks get smarter.

Adversarial Attacks on medical AI will rise.

Hackers might try to poison the training data.

They might try to alter a Digital Twin to trigger a wrong prescription.

The war for Cyber Security in health is just starting.

Your infrastructure must be Quantum-Resistant.

It must be resilient.

It must be monitored 24/7.

This is why “DIY” health IT is dying.

You need managed, fortified solutions.

Conclusion

The verdict is in.

Dr. Google was the pioneer.

It democratized information.

But ChatGPT and Generative AI are the experts.

They democratize understanding.

They turn data into advice.

But with great power comes great responsibility.

(Yes, a cliché, but true).

ChatGPT’s medical accuracy is the hurdle.

Privacy is the wall.

You can clear the hurdle and scale the wall.

But you need the right gear.

You need secure cloud infrastructure.

You need DevOps discipline.

You need a partner like Devolity Business Solutions.

Don’t let your health data drift in the digital wind.

Anchor it with secure AI.

Be the informed patient.

Be the responsible leader.

The future of health is not just searching.

It is solving.

Ready to secure your AI infrastructure?

Contact Devolity Business Solutions Today and build with confidence.

FAQs: Dr. Google vs ChatGPT

Q1: Is ChatGPT better than Google for medical diagnosis?

A1: ChatGPT is better at explaining concepts and synthesizing information, but it can hallucinate. Google provides direct sources but requires you to filter them. Neither replaces a doctor.

Q2: How can I use AI for health privately?

A2: Use enterprise-grade, private AI instances (like Azure OpenAI) that do not use your data for training. Avoid free, public chatbots for sensitive data.

Q3: What role does cloud computing play in health AI?

A3: AWS Cloud and Azure provide the massive compute power needed to run LLMs and the security frameworks to protect patient data (HIPAA compliance).

Q4: Can Devolity Business Solutions build a health chatbot?

A4: Yes. We specialize in building the secure infrastructure (using Terraform and DevOps best practices) required to host and run compliant health AI applications.

Q5: Why is “Context” so important in AI health?

A5: Context allows the AI to tailor advice to your age, history, and medications, avoiding generic or dangerous suggestions that standard search engines might offer.

